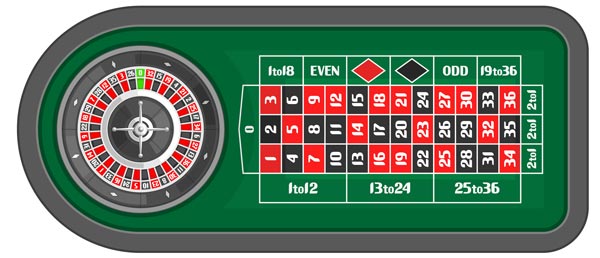

I know I won't make money, or a living wage, off of gambling, especially roulette. All I'm looking for are some strategies that maximize profits and minimize losses. I study computer science and have an exam coming up in probability, machine learning, hidden models, etc., so I thought some light gambling on the roulette wheel counts as studying.

In roulette, you can always place an even-money bet that covers half of the numbers 1–36: even/odd, red/black, or 1–18/19–36. Because of the green zero (and the double zero on American wheels), such a bet wins slightly less than 50% of the time: 18/37 ≈ 48.6% on a single-zero wheel. The martingale system works as follows: start with a small stake, for instance 1€, and keep making the same bet. After every loss, double the stake; after every win, go back to the initial stake. A single win therefore recovers all the losses in the preceding streak plus a profit equal to the initial stake.

A machine learning algorithm can also learn rankings. If you give it a few examples in which you have rated item 1 as better than item 2, it can learn to rank the items. But you still need training data that states, for each pair of items it contains, whether item 1 ranks above item 2.
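To see why the martingale cannot beat the house, here is a minimal Python sketch of the usual rule (double the stake after each loss, return to the base stake after a win). It assumes a single-zero wheel where an even-money bet wins with probability 18/37, a starting bankroll of 100€ and a table maximum of 100€; the bankroll, table limit, session length and function names are hypothetical choices for illustration.

```python
import random

P_WIN = 18 / 37  # even-money bet on a single-zero wheel (assumption: European rules)

def martingale_session(bankroll, base_bet=1, table_max=100, rounds=200, rng=random):
    """Play one martingale session and return the final bankroll.

    Double the stake after every loss, return to base_bet after every win.
    The session stops early if the next stake exceeds the bankroll or the
    (hypothetical) table maximum, because the system can no longer be followed.
    """
    bet = base_bet
    for _ in range(rounds):
        if bet > bankroll or bet > table_max:
            break
        if rng.random() < P_WIN:
            bankroll += bet
            bet = base_bet
        else:
            bankroll -= bet
            bet *= 2
    return bankroll

def simulate(sessions=10_000, start=100, seed=1):
    """Return (average change in bankroll, share of sessions ending in profit)."""
    rng = random.Random(seed)
    results = [martingale_session(start, rng=rng) for _ in range(sessions)]
    mean_change = sum(r - start for r in results) / sessions
    share_up = sum(r > start for r in results) / sessions
    return mean_change, share_up

if __name__ == "__main__":
    mean_change, share_up = simulate()
    print(f"sessions ending in profit: {share_up:.1%}")
    print(f"average change per session: {mean_change:+.2f} EUR")
```

Doubling only changes how the losses are distributed: most losing streaks are short and end in a 1€ gain, but an occasional long streak runs into the bankroll or the table limit and wipes out those gains. Because every individual bet has negative expected value, the average change per session stays negative however the stakes are sized.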

Probability and physics are helping make even roulette seem ultimately predictable.

In his new book, The Perfect Bet: How Science and Math Are Taking the Luck Out of Gambling, Adam Kucharski details how trying to understand dice games led one mathematician to develop probability theory, how one of the first wearable computers was designed to systematically yet covertly predict the fall of a roulette ball, and how poker-playing bots are advancing winning strategies more quickly than we think. As he shows, science, mathematics, and gambling have long been intertwined, and thanks to advances in big data and machine learning, our sense of what's predictable is growing, crowding out the spaces formerly ruled by chance. At the same time, though, we're letting more of our lives be influenced by algorithms, bits of code whose effects are beyond our full understanding. As in so many other areas, the creations are outpacing their creators. In the lightly edited interview below, Kucharski explains how we got here, what poker-playing bots can show us about being human, and what comes next.

In the book you call gamblers the godfathers of probability theory, noting that it's a newer area of mathematics than we might expect. Can you talk a little bit about how probability theory came out of gambling?

One thing that I found remarkable about the history of math is that it's only fairly recently that people started looking into quantifying luck. For a long period of history, topics like geometry were the main focus of study. There was a lot less interest in random events: it's actually not until the 16th century that gamblers start to think about how likely things are and how that could be measured.

There was a gambler called Gerolamo Cardano: although a physician by profession, he had a pretty keen gambling habit. He was one of the first people to outline what's known as the sample space. This is the set of all the possible outcomes you could get: say you're rolling two dice together, there are 36 ways they can land. Then, out of those 36 ways, you can home in on the ones you're interested in. This provided a framework for measuring these kinds of chance events.
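Cardano's idea translates directly into code: list the sample space, then count the outcomes you care about. A minimal Python sketch, assuming two fair six-sided dice so that all 36 ordered outcomes are equally likely; the two example events are arbitrary choices.

```python
from fractions import Fraction
from itertools import product

# Sample space: every ordered outcome (first die, second die) of two six-sided dice.
sample_space = list(product(range(1, 7), repeat=2))

def probability(event):
    """Share of the equally likely outcomes for which event(outcome) is true."""
    favourable = sum(1 for outcome in sample_space if event(outcome))
    return Fraction(favourable, len(sample_space))

print(len(sample_space))                          # 36
print(probability(lambda d: d[0] + d[1] == 7))    # 1/6
print(probability(lambda d: d[0] == d[1]))        # 1/6 (doubles)
```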

That was one of the first foundations of probability theory. Over the following years, a number of other researchers built on those ideas, again often using bets and wagers to inspire the way they thought about these problems.

You recount several examples of scientists taking on certain gambling problems. Richard Feynman, for example, tells professional gambler Nick the Greek that it seems impossible for a gambler to have any advantage.

Feynman was obviously famous for his curiosity. On his trips to Vegas, he wasn't a big gambler, but he was interested in working out the odds. I think he started with craps, figuring that although it was pretty poor odds, he wouldn't lose that much, and it might be a fun game. On his first roll he lost a load of money, so he decided to give up.

He was talking to one of the showgirls, who mentioned Nick the Greek. He was this famous professional gambler, and Feynman just couldn't work out how you could have the concept of a professional gambler, because all the games are stacked against you in Vegas. On talking to him, he realized what was actually happening: Nick the Greek wasn't betting on the tables. His gambling strategy was making side bets with people around the table. He was almost playing off human flaws and human superstitions, because Nick the Greek had a very good understanding of the true odds.

If he made side bets at different odds he could kind of exploit that difference between the true outcome and what people perceive it to be. That's a theme that continues throughout gambling: If you can get better information about what's going to happen and you're competing with people who don't have much idea about how things are going to land or what the future might be, then that gives you a potentially quite lucrative edge.
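The edge described here is an expected-value calculation: a bet priced at odds that differ from the true probability has positive expectation for whoever knows the true probability. The interview doesn't say which side bets Nick the Greek made, so this minimal Python sketch uses a hypothetical one: betting at even money that at least one six appears in four rolls of a fair die, against someone who treats it as a coin flip.

```python
from fractions import Fraction

def expected_value(p_win, payout):
    """Expected profit per unit staked: win `payout` units with probability p_win, else lose the stake."""
    return p_win * payout - (1 - p_win)

# Hypothetical side bet: at least one six in four rolls of a fair die, at even money.
p_true = 1 - Fraction(5, 6) ** 4      # complement of "no six in four rolls"
p_perceived = Fraction(1, 2)          # what the other bettor assumes

print(p_true)                          # 671/1296
print(expected_value(p_perceived, 1))  # 0: looks like a fair bet to the other bettor
print(expected_value(p_true, 1))       # 23/648: a small but positive edge per bet
```

The true probability is only about 51.8%, so the edge is about 3.5% of the stake per bet; it pays off only across many bets.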

There's a sense that being at the whim of chance is somehow a very human position. Accepting things as totally unpredictable and leaving yourself up to fate is often part of the allure for the nonprofessional gambler. But then there's a tension, because other people say maybe these are things that we can predict, and maybe this isn't as much up to chance as we imagine it to be.

It's really been this almost tug of war between believing something is skill and believing something is luck, whether it's in gambling or just in other industries. I think we have a tendency if we succeed at something to think it's skill and if we fail at something to almost blame luck. We just say, 'Wow, that's chance, there is nothing I can do about it.'

The work of a lot of people who study these games is trying to think of a framework within which we can measure where we are in terms of skill and in terms of chance. Mathematician Henri Poincaré was one of the early people, in the early 1900s, interested in predictability. He said that when we have uncertain events, essentially it's a question of ignorance.

He said that there are three levels of ignorance. Depending on how much information you have about the situation and what you could measure, things will appear increasingly random. Not necessarily because it's truly a lucky event, but really it's our perception that makes it appear unexpected.

One of the games that I think we expect to be least predictable is roulette. But as you point out, Claude Shannon, considered the father of information science, and Edward Thorp, who would later write one of the most popular books on card counting, made a strong case for being able to systematically predict roulette spins.

For a long period of time, roulette has almost been like a case study for people interested in random events. Early statistics was honed by studying roulette tables because you had this process that was seen as very complicated to actually understand fully, but if you collected enough data then you could analyze it and try and look for patterns and see whether these tables are truly random.
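One standard way to check whether a table is truly random (the interview doesn't name a specific test) is a chi-square goodness-of-fit test on how often each pocket comes up. This minimal Python sketch assumes a single-zero wheel with 37 equally likely pockets and estimates the p-value by simulating fair wheels, so it needs only the standard library; the tilted wheel in the usage lines is made up.

```python
import random

POCKETS = 37  # single-zero wheel: pockets 0-36 (assumption)

def counts_from_spins(spins, pockets=POCKETS):
    """Tally how many times each pocket came up."""
    counts = [0] * pockets
    for pocket in spins:
        counts[pocket] += 1
    return counts

def chi_square(counts):
    """Pearson chi-square statistic of observed counts against equally likely pockets."""
    n = sum(counts)
    expected = n / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)

def monte_carlo_p_value(spins, trials=500, seed=0):
    """Estimate P(chi-square >= observed) under a fair wheel by simulating fair samples of the same size."""
    rng = random.Random(seed)
    observed = chi_square(counts_from_spins(spins))
    extreme = 0
    for _ in range(trials):
        fair = rng.choices(range(POCKETS), k=len(spins))
        if chi_square(counts_from_spins(fair)) >= observed:
            extreme += 1
    # The +1 terms count the observed sample itself, so the estimate is never exactly 0.
    return (extreme + 1) / (trials + 1)

if __name__ == "__main__":
    rng = random.Random(42)
    fair_spins = [rng.randrange(POCKETS) for _ in range(3700)]
    # Hypothetical tilted wheel: pocket 17 is twice as likely as any other pocket.
    weights = [2 if pocket == 17 else 1 for pocket in range(POCKETS)]
    tilted_spins = rng.choices(range(POCKETS), weights=weights, k=3700)
    print("fair wheel p-value:  ", monte_carlo_p_value(fair_spins))
    print("tilted wheel p-value:", monte_carlo_p_value(tilted_spins))
```

A small p-value means the observed pocket counts would be unusual for a fair wheel. With only a few thousand spins, only fairly large biases are likely to show up, which is one reason such studies needed so much data.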


Edward Thorp, while he was a Ph.D. physics student, realized that actually beating a roulette table, especially if it's perfectly maintained, isn't really a question of statistics. It's a physics problem. He compared the ball circulating a roulette table to a planet in orbit. In theory, if you've got the equations (which you do, because it's a physics system), then by collecting enough data you should be able to essentially solve those equations of motion and work out where the ball is going to land.
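Thorp's actual equations were never fully published, so the following is only a toy version of the idea, not his method. It assumes the ball slows with constant angular deceleration, that you can time two successive revolutions against a fixed mark, and that the ball leaves the track at a hypothetical critical angular speed ω_c. Under those assumptions, the kinematic relation ω_c² = ω² − 2αθ gives the remaining angle θ the ball travels before it drops.

```python
import math

def predict_drop_angle(rev_time_1, rev_time_2, critical_speed):
    """Toy prediction of the angle (radians) the ball travels before leaving the track.

    Assumes constant angular deceleration and that both revolution times are
    measured from the same fixed mark, back to back. critical_speed is a
    hypothetical angular speed (rad/s) at which the ball drops off the track.
    """
    # Average angular speed (rad/s) over each timed revolution.
    w1 = 2 * math.pi / rev_time_1
    w2 = 2 * math.pi / rev_time_2
    # Under constant deceleration, each average equals the instantaneous speed at the
    # midpoint of its revolution; the two midpoints are (t1 + t2) / 2 seconds apart.
    decel = (w1 - w2) / ((rev_time_1 + rev_time_2) / 2)
    # Speed at the end of the second timed revolution.
    w_now = w2 - decel * rev_time_2 / 2
    if decel <= 0 or w_now <= critical_speed:
        raise ValueError("timings are inconsistent with a decelerating ball")
    # Constant-deceleration kinematics: w_c^2 = w_now^2 - 2 * decel * theta.
    return (w_now ** 2 - critical_speed ** 2) / (2 * decel)

if __name__ == "__main__":
    # Hypothetical timings: 0.50 s and 0.55 s per revolution, critical speed 4 rad/s.
    theta = predict_drop_angle(0.50, 0.55, critical_speed=4.0)
    print(f"ball drops after about {theta / (2 * math.pi):.1f} more revolutions")
```

Real prediction devices also have to account for the rotor's own rotation and the scatter of the ball off the deflectors, which this sketch ignores.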


Although in theory that could work, the difficulty is [that] in a casino, you actually need to take those measurements and perform those calculations to solve those equations of motion while you are there. So Thorp then talks to Shannon, who was one of the pioneers of information theory and had all sorts of interesting contraptions and inventions in his basement. Thorp and Shannon actually put together the world's first wearable computer, a roulette-prediction system. The first wearable bit of tech was designed to be hidden under clothing so you could go into casinos and predict where the roulette ball would land.

Those early attempts were mainly let down by the technology. They had a method which potentially could be quite successful. But it was implementing it, for them at that time—that was the big challenge.

You mention, though, a much more successful attempt in 2004.

This is the Ritz casino in London, where newspapers reported that a group of gamblers had used a roulette system, initially said to involve a laser scanner, to track the motion of the roulette ball. In the end, they walked away with just over a million pounds.

That's an incredibly lucrative take, even for high-stakes casinos like that. This [attempt] reignited a lot of the interest in these stories because, although Thorp and subsequently some students at the University of California had focused on roulette tables, they'd always left out parts of their methods. They'd never published all the equations.

So there's always been this element of mystery and glamour around these processes. Actually, it's only very recently that researchers in Hong Kong tested these roulette strategies properly and published a paper. It concluded that, with this kind of system, it's plausible that you could take it into a casino and win. I think there have been a number of other stories of gamblers trying out these techniques in casinos, but it's always been very secretive. It's interesting how long it has taken for researchers to test this problem.

Toward the end of the book you talk about poker. If probability and physics are helping make even roulette seem ultimately predictable, poker seems a tougher nut to crack.

Exactly. Poker, on the face of it, seems like a perfect game for a mathematician because it's just the probability that you get this card and someone else gets a card. Of course, anyone who's played it realizes that it's much more about reading your opponents and working out what they are going to do and what they think you're going to do.

Early research into poker actually inspired a lot of the ideas of game theory: everything in A Beautiful Mind about how, if players get together and try to optimize their strategy, they'll come up with certain approaches. In more recent years, that link between science and gambling has continued, and actually a lot of the attempts at A.I. are focusing on these kinds of games.

Although historically we've seen games like chess beaten—and more recently, Go—these are what's known as perfect information games. You have everything in front of you while you're playing. So in theory at least there's a set of moves that if you follow them exactly then you always get the optimal outcome.

If you've ever played tic-tac-toe, you'll know most people work it out pretty quickly. There's just a fixed set of things that you can do, and that will always force a certain outcome. In poker you can't do that, because there's an element of randomness. There's hidden information, in that you don't know what your opponent's cards are. You've got to adjust your strategy over time, and this is where things like bluffing and manipulating your opponents come into play.
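For tic-tac-toe, the claim that a perfect-information game has a fixed way to force the best outcome can be checked directly with minimax search, the standard technique for small games of this kind (the interview doesn't name it). This minimal Python sketch explores the full game tree, caching positions it has already scored; boards are 9-character strings read row by row.

```python
from functools import lru_cache

LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
         (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
         (0, 4, 8), (2, 4, 6)]              # diagonals

def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def value(board, player):
    """Value with perfect play from X's point of view: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0
    nxt = "O" if player == "X" else "X"
    outcomes = [value(board[:i] + player + board[i + 1:], nxt)
                for i, cell in enumerate(board) if cell == " "]
    # X picks the move that maximizes the value, O the one that minimizes it.
    return max(outcomes) if player == "X" else min(outcomes)

print(value(" " * 9, "X"))  # 0: with perfect play from both sides, the game is a draw
```

The same exhaustive search doesn't carry over directly to poker: the player to move doesn't know the opponent's cards, so positions can't be scored this way without accounting for hidden information.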

I think for artificial intelligence that's a really interesting challenge, because you've got this hidden information and risk-taking aspects. Arguably, that's a lot closer to a lot of situations we actually face on a day-to-day basis. Whenever you go into a negotiation or you try to bargain for something, you've got information that you know, while they've got information that they know, and you have to adjust your strategy to account for the difference.

So much of our strategizing depends on accounting for having incomplete information. It reminds me of Bill Benter's horse-racing models. He models hundreds of variables to predict how the horses will run, but he also cautions about mistaking correlation for causation, especially when the correlations seem wildly counterintuitive. He's saying don't try to explain the models, as long as they work. That's what often happens with machine learning: we put a machine to work on some problem, feed it massive amounts of data, and it returns with these correlations that we never would have expected. They're totally counterintuitive, but we kind of just have to take them because they're revealing something.

I think the approach that these scientific bettors use has been really interesting. One of the questions that I found the most unexpected answer to was when I asked, 'What criteria do you use to make your strategy?' And with Bill Benter and these horse-racing syndicates, they're really just interested in which horses will win. They don't want to explain why it will win. They just want a model where, if you put in enough information, you will get a reliable prediction.

I think that goes against a common notion that somebody who's good at gambling is almost an expert and has a lot of knowledge of the narrative of the sport, whereas actually, a lot of these scientific teams treat it much more like an experiment. As long as it gives a good result, they don't mind how they get there.

And similarly with these bots, because there's so much complexity in how they learn (they play billions and billions of games against each other), it's very difficult to understand why a bot might choose a certain strategy. This even goes back to the early days of machine learning and Alan Turing's question: can machines surprise us? Can they come up with something unexpected? Machine learning is increasingly showing that they can, because they can learn so far beyond what their creators are capable of.

In many cases, these poker bots are turning up with strategies that humans would never have thought to attempt, because they've simply been able to crunch through that many games, and they've refined their strategies. It's a really interesting development we're seeing in terms of what the minimal amount of information or strategy is that you need to be successful. We really try to identify questions that maybe people wouldn't traditionally ask.

That brings us back to this notion that chance is both something that can't be explained away any further, and yet there's something deeply human about the desire to create a story to explain why things happen. Computers are now showing us strategies and explanations we never could have arrived at on our own; as you say, they're outpacing their creators. What are some of the ramifications of that process?

One of the things that really surprised me in writing the book is how quickly these developments are happening. Even the Go victories this year: I think lots of people didn't expect them to happen that suddenly. And likewise with poker: last year some researchers found the optimal solution for a two-player limit game. Now you've got a lot of bots taking on no-limit games (where you can go all-in, which you often see in tournaments), and they're faring incredibly well.


The developments are happening a lot faster than we expected and they're going beyond what their creators are capable of. I think it is a really exciting but also potentially problematic line, because it's much harder to unpack what's going on when you've got a creation which is thinking much further beyond what you can do.

I think another aspect which is also quite interesting is some of the simpler algorithms that are being developed. Along with the poker bots, which spend a huge amount of time learning, you have these very high-speed algorithms in gambling and finance, which are really stripped down to a few lines of code. In that sense, they're not very intelligent at all. But put a lot of these things together at very short time scales and, again, that's something that humans can't compete with. They're acting so much faster than we can process information; you've got this hidden ecosystem being developed where things are just operating much faster than we can handle.

This goes beyond simply teaching bots to play poker or Watson winning at Jeopardy! There are wider ramifications.

Yes. And I think the increasing availability of data and our ability to process it and create machines that could learn on their own, in many ways, it's challenging some of those early notions about learning machines. Even some of the criticisms and limitations that Alan Turing put forward when they were first coming up with these ideas, they're now being potentially surpassed by new approaches to how machines could learn.

These poker bots, rather than just learning to play repeatedly, are developing incredibly human traits. People treat some of these bots just like humans: they refer to them in human terms because they bluff and they deceive and they feign aggression. Historically, we think of these behaviors as innate to our species, but we're seeing now that potentially these are traits you could have with artificial intelligence. To some extent it's blurring the boundaries between what we think is human and what's actually something that can be learned by machine.

Roulette Machine Learning Game

Machine learning (ML) and AI are still new. While the growth of open source tools and online courses has made them accessible to a large number of users, it's still hard to get them right. The growing population of novice users accessing these powerful tools means that many projects will fail.

So what questions should you ask yourself at the beginning to avoid having things go wrong?

Does it really solve a business problem?

Or is it just an opportunity to play with some cool technology? With exciting new technologies like Deep Learning for Computer Vision, the results are so cool that it's easy to get caught up in the technology itself rather than focusing on the value being provided.

What is 'good enough'?

While we may know what business problem we're tackling, we must also know what results are minimally sufficient. Is it reasonable to expect that we can achieve these results? If we need to get 99.9% accuracy before any value is provided, then perhaps this is not the right problem.

It's often the case that we're trying to automate some routine work that is currently being done by people. One often ignored benefit of applying ML to a project is the need to measure performance. As part of this effort, it is essential that we get a baseline from the humans currently executing the process. We should compare against this baseline rather than perfection.

Does the customer have reasonable expectations? Even if the resulting system is accurate enough to provide value, the customer may be expecting more. They may be expecting perfection–they probably won't get it.

Where is it okay to make mistakes?

The most overlooked challenge in applying ML is selecting or designing the learning objectives. It is a very rare case where your model can be perfect. It will make mistakes, but which mistakes? This requires careful design. Unfortunately, this is something people often ignore.

Consider how mistakes are penalized during training. Typically every training data point is considered equal, but of course they are not. Identifying a big credit card fraud, for example, is much more important than identifying a small fraud. So we should probably treat the corresponding data points differently during training. We should probably penalize the model more for missing a big fraud than for missing a small fraud.
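One way to make a model care more about big frauds is to weight each example's loss during training. This minimal Python sketch computes a weighted binary cross-entropy, assuming (hypothetically) that each transaction's weight is simply its amount; the two-transaction data set and the two models' predictions are made up. Many libraries accept per-example weights in a similar way, for example the sample_weight argument accepted by many scikit-learn estimators' fit methods.

```python
import math

def weighted_log_loss(y_true, p_pred, weights):
    """Weighted binary cross-entropy, normalised by the total weight."""
    eps = 1e-12  # clip probabilities so log() never sees 0
    total = 0.0
    for y, p, w in zip(y_true, p_pred, weights):
        p = min(max(p, eps), 1 - eps)
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / sum(weights)

# Two hypothetical frauds (label 1): a big one and a small one.
labels = [1, 1]
amounts = [5000, 20]        # hypothetical weights: the transaction amounts
misses_big = [0.1, 0.9]     # model A: catches the small fraud, misses the big one
misses_small = [0.9, 0.1]   # model B: the reverse

for name, preds in [("A", misses_big), ("B", misses_small)]:
    unweighted = weighted_log_loss(labels, preds, [1, 1])
    weighted = weighted_log_loss(labels, preds, amounts)
    print(name, round(unweighted, 3), round(weighted, 3))
```

With equal weights the two models score identically; weighting by amount shows that missing the 5,000 fraud is far worse. Scoring the test set with the same weighted function keeps evaluation aligned with the costs that actually matter.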

These choices have enormous real world effects but are invisible during the train/test process as we typically use the same approach to measure (and so penalize) performance on test data as we do on training data. Getting it wrong won't be visible until the system is deployed.

Where does the training data come from?

A classic mistake when applying ML is poor alignment of the training data with the deployment scenario. This is a bit technical, but almost all ML algorithms are predicated on the notion that their training data is drawn from the same distribution as the testing data. This can go wrong in a variety of ways.

Training data is often collected in some biased way. For example, in drug discovery research, successful experiments are published and unsuccessful ones are not. This leads to enormous bias in training that has a huge impact on the final performance of the system. This is a subtle issue that occurs frequently. We use the data we have available, but is the data right or just cheap?
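The publication example is a form of selection bias, and a short simulation shows how much it can distort what a model learns. This minimal Python sketch assumes every experiment has a true effect of zero, measured with Gaussian noise, and a hypothetical rule that only results above a threshold get published; all parameter values are illustrative.

```python
import random
import statistics

def simulate_publication(n_experiments=10_000, true_effect=0.0, noise=1.0,
                         threshold=1.0, seed=0):
    """Run noisy experiments; 'publish' only those whose measured effect exceeds threshold."""
    rng = random.Random(seed)
    measured = [rng.gauss(true_effect, noise) for _ in range(n_experiments)]
    published = [m for m in measured if m > threshold]
    return measured, published

measured, published = simulate_publication()
print(f"all experiments:       n={len(measured)}, mean effect {statistics.mean(measured):+.2f}")
print(f"published experiments: n={len(published)}, mean effect {statistics.mean(published):+.2f}")
```

Across all experiments the average effect is close to the true value of zero, but a model trained only on the published ones would learn a large effect that doesn't exist.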

Are you overfitting?

Last, but not least, we have overfitting. This is a subtle and deep challenge.


One common cause of overfitting is 'multiple testing.' Imagine a thousand people playing roulette ten times each. Some of them will win and some of them will lose. Suppose we take the person who won the most money. They could have won a lot. Are they a great roulette player? Or are they just lucky? In this case we know that they are just lucky, but we only know that because we understand roulette. If our pool of people is large enough, we will always find someone who performs really well.
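A minimal simulation of the thousand-players thought experiment, assuming every player bets one unit on red, at even money, on a single-zero wheel. Every player has the same negative expected profit, yet the best of them looks like a star; the closed-form function gives the chance that at least one player wins all ten spins. The function names and the fixed seed are illustrative choices.

```python
import random

P_RED = 18 / 37  # even-money bet on red, single-zero wheel (assumption)

def best_player_profit(players=1000, spins=10, seed=0):
    """Every player bets 1 unit on red for `spins` spins; return the biggest winner's profit."""
    rng = random.Random(seed)
    profits = []
    for _ in range(players):
        wins = sum(rng.random() < P_RED for _ in range(spins))
        profits.append(wins - (spins - wins))  # +1 per win, -1 per loss
    return max(profits)

def chance_someone_wins_every_spin(players=1000, spins=10):
    """Probability that at least one of `players` independent players wins all `spins` bets."""
    return 1 - (1 - P_RED ** spins) ** players

if __name__ == "__main__":
    print("best player's profit:", best_player_profit())
    print(f"chance someone wins all 10 spins: {chance_someone_wins_every_spin():.2f}")
    print(f"expected profit of any one player: {10 * (2 * P_RED - 1):+.2f} units")
```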

He was talking to one of the showgirls, who mentioned Nick the Greek. He was this famous professional gambler, and Feynman just couldn't work how you could have the concept of a professional gambler because all the games are stacked against you in Vegas. On talking to him he realized what was actually happening was Nick the Greek wasn't betting on the tables. His gambling strategy was making side bets with people around the table. He was almost playing off human flaws and human superstitions, because Nick the Greek had a very good understanding of the true odds.

If he made side bets at different odds he could kind of exploit that difference between the true outcome and what people perceive it to be. That's a theme that continues throughout gambling: If you can get better information about what's going to happen and you're competing with people who don't have much idea about how things are going to land or what the future might be, then that gives you a potentially quite lucrative edge.

There's a sense that being at the whim of chance is somehow a very human position. Admitting things as totally unpredictable and leaving yourself up to fate is often part of the allure for the nonprofessional gambler. But then there's a tension, because other people say maybe these are things that we can predict, and maybe this isn't as much up to chance as we imagine it to be.

It's really been this almost tug of war between believing something is skill and believing something is luck, whether it's in gambling or just in other industries. I think we have a tendency if we succeed at something to think it's skill and if we fail at something to almost blame luck. We just say, 'Wow, that's chance, there is nothing I can do about it.'

The work of a lot of people who study these games is trying to think of a framework within which we can measure where we are in terms of skill and in terms of chance. Mathematician Henri Poincaré was one of the early people, in the early 1900s, interested in predictability. He said that when we have uncertain events, essentially it's a question of ignorance.

He said that there are three levels of ignorance. Depending on how much information you have about the situation and what you could measure, things will appear increasingly random. Not necessarily because it's truly a lucky event, but really it's our perception that makes it appear unexpected.

One of the games that I think we expect to be least predictable is roulette. But as you point out, Claude Shannon, considered the father of information science, and Edward Thorp, who would later write one of the most popular books on card counting, made a strong case for being able to systematically predict roulette spins.

For a long period of time, roulette has almost been like a case study for people interested in random events.Early statistics was honed by studying roulette tables because you had this process that was seen as very complicated to actually understand fully, but if you collected enough data then you could analyze it and try and look for patterns and see whether these tables are truly random.

Your Crown Rewards membership is reviewed every six months on 1 April and 1 October. Your membership tier is based on the number of Status Credits earned in the six months prior to these dates. If you join between renewal dates you can be upgraded to the next membership tier at any time. Crown casino membership tier. Start Earning Points Today You can now start earning points every time you visit Crown. Enjoy the excitement of the casino, stay at one of Crown's world-class hotels or dine at award-winning restaurants simply show your card when you pay and you could earn points straight away.

Edward Thorp, who wrote while he was a Ph.D. physics student, realized that actually beating a roulette table, especially if it's perfectly maintained, isn't really a question of statistics. It's a physics problem. He compared the ball circulating a roulette table to a planet in orbit. In theory, if you've got the equations—which you do because it's a physics system—then by collecting enough data you should be able to essentially solve those equations of motion and work out where the ball is going to land.

The first wearable bit of tech was designed to be hidden under clothing so you could go into casinos and predict where the roulette ball will land.

Although in theory that could work, the difficulty is [that] in a casino, you actually need to take those measurements and perform those calculations to solve those equations of motion while you are there. So Thorpe then talks to Shannon, who was one of the pioneers of information theory and had all sorts of interesting contraptions and inventions in his basement. Thorpe and Shannon actually put together the world's first wearable roulette system computer. The first wearable bit of tech was designed to be hidden under clothing so you could go into casinos and predict where the roulette ball will land.

Those early attempts were mainly let down by the technology. They had a method which potentially could be quite successful. But it was implementing it, for them at that time—that was the big challenge.

You mention, though, a much more successful attempt in 2004.

This is the Ritz casino in London, where you have these newspaper reports of people whose roulette system initially said to have used a laser scanner to try and track the motion of the roulette ball. In the end, they walked away with just over a million pounds.

That's an incredibly lucrative take, even for high-stakes casinos like that. This [attempt] reignited a lot of the interest in these stories because, although Thorp and subsequently some students at the University of California had focused on roulette tables, they'd always left out a bit of their methods. They'd never published all the equations.

Sothere's always this element of mystery and glamour around these processes. Actually, it's only very recently that researchers in Hong Kong actually tested these roulette strategies properly andpublished a paper. It actually said, that if we have this kind of system for roulette it's plausible that we can take into casinos and win. I think there's been a number of other stories of gamblers trying out these techniques in casinos, but it's always been very secretive. It's interesting how long it's actually taken for some researchers to test this problem.

Toward the end of the book you talk about poker. If probability and physics are helping make even roulette seem ultimately predictable, poker seems a tougher nut to crack.

Exactly. Poker, on the face of it, seems like a perfect game for a mathematician because it's just the probability that you getthis card and someone else gets a card. Fun casino no deposit bonus code 2018. Of course, anyone who's played it realizes that it's much more about reading your opponents and working out what they are going to do and what they think you're going to do.

Early research into poker actually inspired a lot of the ideas of game theory: so, everything inA Beautiful Mind, about if players get together and try to optimize their strategy, they'll come up with certain approaches. In more recent years, that link between science and gambling has continued, and actually a lot of the attempts at A.I. are focusing on these kinds of games.

Although historically we've seen games like chess beaten—and more recently, Go—these are what's known as perfect information games. You have everything in front of you while you're playing. So in theory at least there's a set of moves that if you follow them exactly then you always get the optimal outcome.

If you've ever played tic-tac-toe: most people work it out pretty quickly. There's just a set of fixed things that you can do, and that will always force a certain outcome. Whereas with poker, in poker you can't do that because there's an element of randomness. There's hidden information in that you don't know what your opponent's cards are. You've got to adjust your strategy over time, and this is where things like bluffing and manipulating your opponents come into play.

I think for artificial intelligence that's a really interesting challenge, because you've got this hidden information and risk-taking aspects. Arguably, that's a lot closer to a lot of situations we actually face on a day-to-day basis. Whenever you go into a negotiation or you try to bargain for something, you've got information that you know, while they've got information that they know, and you have to adjust your strategy to account for the difference.

So much of our strategizing depends on accounting for having incomplete information. It reminds me ofBill Benter's horse-racing models. He models hundreds of variables to predict how the horses will run, but he also cautions about mistaking correlation for causation, especially when the correlations seem wildly counterintuitive. He's saying don't try to explain the models, as long as they work. That's what often happens with machine learning: we put a machine to work on some problem, feed it massive amounts of data, and it returns with these correlations that we never would have expected. They're totally counterintuitive, but we kind of just have to take them because they're revealingsomething.

I think the approach that these scientific bettors use has been really interesting. One of the questions that I found the most unexpected answer to was when I asked, 'What criteria do you used to make your strategy?' And with Bill Benter and these horse-racing syndicates, they're really just interested in which horses will win. They don't want to explainwhyit will win.They just want a model where if you put in enough information you will get a reliable prediction.

I think that goes against a common notion that somebody who's good at gambling is almost an expert and has a lot of knowledge of the narrative of the sport, whereas actually, a lot of these scientific teams treat it much more like an experiment. As long as it gives a good result, they don't mind how they get there.

And similarly with these bots, because you've got so much complexity in terms of how they learn (they play billions and billions of games against each other), it's very difficult to understand why a bot might choose a certain strategy. This even goes back to the early days of machine learning and Alan Turing's question: can machines surprise us? Can they come up with something unexpected? Machine learning is increasingly showing that they can, because they can learn so far beyond what their creators are capable of.
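Learning by playing against yourself can be illustrated with a toy example. The sketch below uses rock-paper-scissors as a stand-in for poker. It applies regret matching, the update rule underlying counterfactual regret minimization (CFR), which many poker bots build on. It is not how any particular bot works. The names `self_play` and `strategy_from`, the small initial bias, and the iteration count are all illustrative assumptions. Two copies of the same learner play each other repeatedly. Each copy shifts probability toward the moves it regrets not having played, and their average strategies drift toward the equilibrium of playing each move one third of the time.

```python
# Payoff to the player choosing the row: 0 = rock, 1 = paper, 2 = scissors.
PAYOFF = [[0, -1, 1],
          [1, 0, -1],
          [-1, 1, 0]]

def strategy_from(regret):
    """Regret matching: play each move in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regret]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    return [1 / 3] * 3  # no positive regret yet: play uniformly

def self_play(iterations=50_000):
    # Cumulative regrets for both players; the small bias on player 0's rock
    # is an assumption that gives the learners something to correct.
    regret = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    strategy_sum = [[0.0] * 3, [0.0] * 3]
    for _ in range(iterations):
        strats = [strategy_from(regret[0]), strategy_from(regret[1])]
        for me in (0, 1):
            opp = strats[1 - me]
            # Expected payoff of each pure move against the opponent's current mix.
            action_value = [sum(PAYOFF[a][b] * opp[b] for b in range(3)) for a in range(3)]
            mixed_value = sum(s * v for s, v in zip(strats[me], action_value))
            for a in range(3):
                regret[me][a] += action_value[a] - mixed_value
                strategy_sum[me][a] += strats[me][a]
    # The average strategy over all iterations is what approaches equilibrium.
    return [[s / iterations for s in player] for player in strategy_sum]

averages = self_play()
print(averages)  # each entry should be close to 1/3
```

CFR applies this same rule at every decision point of a game tree with hidden cards, which is far beyond this sketch. Even here, though, nobody hand-codes the final strategy; it emerges from repeated play.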

In many cases, these poker bots are turning up with strategies that humans would never have thought to attempt, because they've simply been able to crunch through that many games, and they've refined their strategies. It's a really interesting development we're seeing in terms of what the minimal amount of information or strategy is that you need to be successful. We really try to identify questions that maybe people wouldn't traditionally ask.

That brings us back to this notion that chance is both something that can't be explained away any further, and yet there's something deeply human about the desire to create a story to explain why things happen. Computers are now showing us strategies and explanations we never could have arrived at on our own; as you say, they're outpacing their creators. What are some of the ramifications of that process?

One of the things that really surprised me in writing the book is how quickly these developments are happening. Even the Go victories this year: I think lots of people didn't expect it to happen that suddenly. And likewise with poker: last year some researchers found the optimal solution for a two-player limit game. Now you've got a lot of bots taking on no-limit games, where you can go all-in (which you often see in tournaments), and they're faring incredibly well.


The developments are happening a lot faster than we expected and they're going beyond what their creators are capable of. I think it is a really exciting but also potentially problematic line, because it's much harder to unpack what's going on when you've got a creation which is thinking much further beyond what you can do.

I think another aspect which is also quite interesting is some of the simpler algorithms that are being developed. Along with the poker bots, which spend a huge amount of time learning, you have these very high-speed algorithms in gambling and finance, which are really stripped down to a few lines of code. In that sense, they're not very intelligent at all. But if you put a lot of these things together at very short time scales, that's again something humans can't compete with. They're acting so much faster than we can process information; you've got this hidden ecosystem developing where things operate much faster than we can handle.

This goes beyond simply teaching bots to play poker or Watson winning at Jeopardy! There are wider ramifications.

Yes. And I think the increasing availability of data, our ability to process it, and our ability to create machines that can learn on their own are, in many ways, challenging some of those early notions about learning machines. Even some of the criticisms and limitations that Alan Turing put forward when these ideas were first coming up are now potentially being surpassed by new approaches to how machines can learn.

You have these poker bots that, rather than just learning to play by repetition, are developing incredibly human traits. People treat some of these bots like humans: they refer to them in human terms because they bluff, they deceive, and they feign aggression. Historically, we have thought of these behaviors as innate to our species, but we're now seeing that these are potentially traits artificial intelligence could have too. To some extent, it's blurring the boundary between what we think is human and what's actually something that can be learned by a machine.

Roulette Machine Learning Game

Machine learning (ML) and AI are still new. While the growth of open source tools and online courses has made them accessible to a large number of users, they are still hard to get right. With a growing population of novice users accessing these powerful tools, many projects will fail.

So what questions should you ask yourself at the beginning to avoid having things go wrong?

Does it really solve a business problem?

Or is it just an opportunity to play with some cool technology? With exciting new technologies like Deep Learning for Computer Vision, the results are so cool that it's easy to get caught up in the technology itself rather than focusing on the value being provided.

What is 'good enough'?

While we may know what business problem we're tackling, we must also know what results are minimally sufficient. Is it reasonable to expect that we can achieve these results? If we need to get 99.9% accuracy before any value is provided, then perhaps this is not the right problem.

It's often the case that we're trying to automate some routine work that is currently being done by people. One often-ignored benefit of applying ML to a project is that it forces us to measure performance. As part of this effort, it is essential to get a baseline from the humans currently executing the process. We should compare against this baseline rather than against perfection.

Does the customer have reasonable expectations? Even if the resulting system is accurate enough to provide value, the customer may be expecting more. They may be expecting perfection, and they probably won't get it.

Where is it okay to make mistakes?

The most overlooked challenge in applying ML is selecting or designing the learning objectives. Your model will very rarely be perfect. It will make mistakes, but which mistakes? That requires careful design, and unfortunately it is something people often ignore.

Consider how mistakes are penalized during training. Typically every training data point is considered equal, but of course they are not. Identifying a big credit card fraud, for example, is much more important than identifying a small fraud. So we should probably treat the corresponding data points differently during training. We should probably penalize the model more for missing a big fraud than for missing a small fraud.
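Here is a minimal sketch of penalizing some mistakes more than others. It weights each transaction's contribution to a binary cross-entropy loss by its amount. That weighting is a hypothetical choice; the text does not prescribe one. `weighted_log_loss` and the data are illustrative. In practice, many scikit-learn estimators expose the same idea through the `sample_weight` argument of `fit`.

```python
import math

def weighted_log_loss(y_true, p_pred, weights):
    """Average binary cross-entropy where each example counts in proportion to its weight."""
    total = 0.0
    for y, p, w in zip(y_true, p_pred, weights):
        p = min(max(p, 1e-12), 1 - 1e-12)  # clamp so log() stays finite
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / sum(weights)

# Hypothetical data: two frauds (label 1), one large and one small.
labels = [1, 1]
amounts = [5000.0, 20.0]  # assumed weighting: the transaction amount
model_a = [0.1, 0.9]      # predicted fraud probabilities: misses the large fraud
model_b = [0.9, 0.1]      # catches the large fraud, misses the small one

flat_a = weighted_log_loss(labels, model_a, [1, 1])
flat_b = weighted_log_loss(labels, model_b, [1, 1])
loss_a = weighted_log_loss(labels, model_a, amounts)
loss_b = weighted_log_loss(labels, model_b, amounts)
print(flat_a, flat_b)  # equal: the unweighted loss cannot tell the models apart
print(loss_a, loss_b)  # model_a is penalized far more for missing the large fraud
```

A training procedure that minimizes the weighted version is pushed toward models like `model_b`. The unweighted version is indifferent between the two.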

These choices have enormous real-world effects, but they are invisible during the train/test process, because we typically measure (and so penalize) performance on test data the same way we do on training data. Getting it wrong won't become visible until the system is deployed.
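The same blind spot shows up at evaluation time. This is a sketch on hypothetical data: two models with identical accuracy can differ enormously in the total amount of fraud they miss, and a plain accuracy score never reveals the difference. The function names and amounts are illustrative assumptions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels; every example counts equally."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def missed_fraud_amount(y_true, y_pred, amounts):
    """Total amount of fraudulent transactions (label 1) predicted as legitimate (0)."""
    return sum(a for t, p, a in zip(y_true, y_pred, amounts) if t == 1 and p == 0)

# Hypothetical test set: two frauds followed by two legitimate transactions.
labels = [1, 1, 0, 0]
amounts = [5000, 20, 30, 40]
model_a = [0, 1, 0, 0]  # misses the large fraud
model_b = [1, 0, 0, 0]  # misses the small fraud

print(accuracy(labels, model_a), accuracy(labels, model_b))  # 0.75 0.75
print(missed_fraud_amount(labels, model_a, amounts))         # 5000
print(missed_fraud_amount(labels, model_b, amounts))         # 20
```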

Where does the training data come from?

A classic mistake when applying ML is poor alignment of the training data with the deployment scenario. This is a bit technical, but almost all ML algorithms are predicated on the notion that their training data is drawn from the same distribution as the testing data. This can go wrong in a variety of ways.

Training data is often collected in some biased way. For example, in drug discovery research, successful experiments are published and unsuccessful ones are not. This leads to enormous bias in training that has a huge impact on the final performance of the system. This is a subtle issue that occurs frequently. We use the data we have available, but is the data right or just cheap?
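Publication bias is easy to simulate. The following sketch rests on assumed numbers. Every experiment measures a true effect of 0.1 with Gaussian noise, and only results above a threshold of 1.0 are "published". A model trained only on published results would see an effect many times larger than the real one. The function name and all parameters are hypothetical.

```python
import random
import statistics

def simulate_publication_bias(true_effect=0.1, noise_sd=1.0, threshold=1.0,
                              n=10_000, seed=0):
    """Run n noisy experiments and 'publish' only those whose result beats the threshold.
    Returns (mean of all results, mean of published results, number published)."""
    rng = random.Random(seed)
    observed = [rng.gauss(true_effect, noise_sd) for _ in range(n)]
    published = [x for x in observed if x > threshold]  # the "successful" experiments
    return statistics.mean(observed), statistics.mean(published), len(published)

mean_all, mean_published, n_published = simulate_publication_bias()
print(mean_all)        # close to the true effect of 0.1
print(mean_published)  # far larger: the published record is a biased sample
```

Nothing in the published data reveals the filter. The bias only becomes visible when predictions are compared against the full population, which is exactly the deployment scenario.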

Are you overfitting?

Last, but not least, we have overfitting. This is a subtle and deep challenge.


Overfitting happens due to 'multiple testing.' Imagine a thousand people playing roulette ten times each. Some of them will win and some of them will lose. Suppose we take the person who won the most money. They could have won a lot. Are they a great roulette player? Or are they just lucky? In this case we know that they are just lucky, but we only know that because we understand roulette. If our pool of people is large enough, we will always find someone who performs really well.
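The roulette thought experiment can be simulated in a few lines. This sketch assumes a single-zero wheel (37 pockets, 18 of them red) and a 1€ bet on red for every spin, so each player's net result is wins minus losses. Every player faces the same negative expectation of -1/37 per spin. Even so, the luckiest of a thousand players typically ends well ahead. `play_roulette` is an illustrative name.

```python
import random

def play_roulette(n_players=1000, spins=10, seed=0):
    """Each player bets 1 euro on red for every spin of a single-zero wheel.
    Pockets 0-17 stand in for the 18 red numbers; the other 19 (including zero) lose.
    Returns each player's net result in euros."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_players):
        net = sum(1 if rng.randrange(37) < 18 else -1 for _ in range(spins))
        results.append(net)
    return results

results = play_roulette()
print("luckiest player:", max(results))
print("average player:", sum(results) / len(results))  # compare with the expected -10/37
```

Rerunning with a different `seed` crowns a different "star", which is the point: the top result comes from the size of the pool, not from skill.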

With machine learning we typically have a large pool of possible models. Training involves explicitly or implicitly 'testing' those models against the training data and choosing the best. Now, is the best model actually good, or did it just get lucky? That is the fundamental question of overfitting. It is well studied and the answer is knowable, but finding it does require some expertise.
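The same effect appears in model selection, sketched here under assumed conditions. The labels are pure coin flips, independent of the features, so no model can truly beat 50%. Picking the best of 1,000 random linear classifiers on a small training set still yields an impressive training score. On fresh test data, that score collapses to chance. All function names, sizes, and the choice of random linear classifiers are illustrative assumptions.

```python
import random

def make_data(n, dim, rng):
    # Features and labels are drawn independently: there is nothing to learn.
    X = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n)]
    y = [rng.randrange(2) for _ in range(n)]
    return X, y

def predict(w, x):
    """Linear classifier: label 1 if the weighted sum of features is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0

def accuracy(w, X, y):
    return sum(predict(w, x) == t for x, t in zip(X, y)) / len(y)

def select_best_model(n_models=1000, dim=10, n_train=40, n_test=5000, seed=0):
    rng = random.Random(seed)
    X_train, y_train = make_data(n_train, dim, rng)
    X_test, y_test = make_data(n_test, dim, rng)
    candidates = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_models)]
    # "Training" here is pure selection: keep the candidate with the best training score.
    best = max(candidates, key=lambda w: accuracy(w, X_train, y_train))
    return accuracy(best, X_train, y_train), accuracy(best, X_test, y_test)

train_acc, test_acc = select_best_model()
print("training accuracy of the winner:", train_acc)  # well above 0.5
print("test accuracy of the winner:", test_acc)       # back to roughly 0.5
```

Held-out data that plays no part in choosing the model is what exposes the luck. Once the test set is also used to pick among many models, it stops doing that job.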

Conclusion


ML and AI technologies are incredibly powerful. However, they can still be hard to apply. Many classic problems (defining success, having a clear value proposition) are exacerbated in this context, while some challenging new problems arise. The good news is that experience helps a lot. The bad news is that it's not always enough: ML and AI remain a field that often requires real expertise to achieve the results and business value that this technology can deliver.